The Data Haze: Why Standardized Practices Are Key to Environmental Testing

Adopting Best Practices and Standardized Testing Can Sharpen Our Collective Focus on Real Solutions.

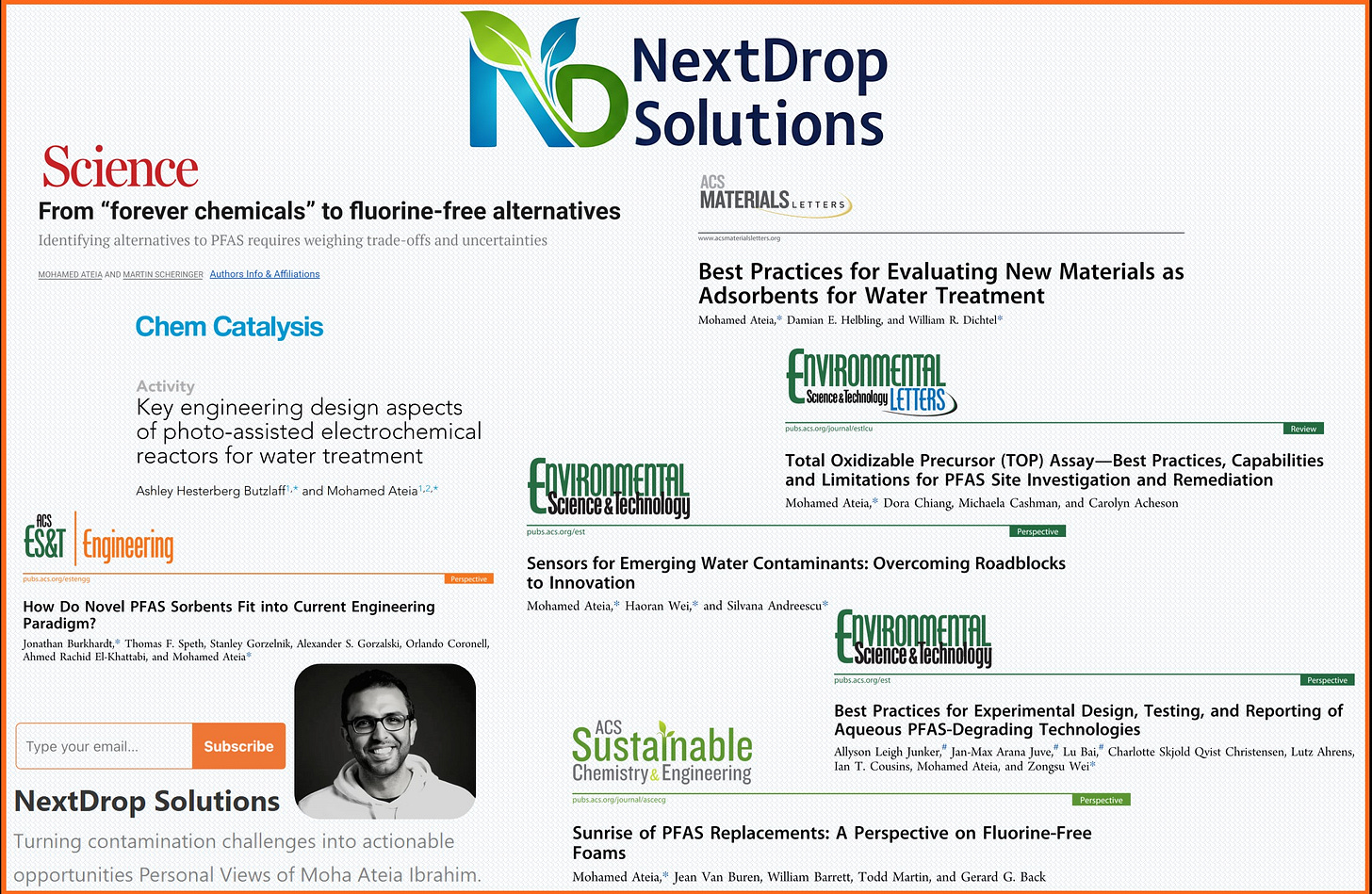

If you've followed my work over the years, you might have noticed a recurring theme in some of my publications – titles that include phrases like "Best Practices," "Perspectives," or calls for standardized reporting, particularly for complex challenges like PFAS analysis and treatment. This isn't a coincidence. It stems from a deep-seated conviction that our collective ability to tackle pressing environmental issues hinges critically on something fundamental: the way we measure, compare, and validate our findings.

I often think of our field through a particular metaphor: imagine a global orchestra where every musician has a slightly different tuning fork. Some are a fraction sharp, others a touch flat. They all play with immense skill and passion, but the resulting symphony, while perhaps beautiful in moments, lacks that perfect, powerful harmony. The true potential of their collective effort remains just out of reach.

This, I believe, mirrors a crucial challenge in environmental science and engineering today. As we confront pervasive contaminants like Per- and Polyfluoroalkyl Substances (PFAS), our community is driven by innovation and a shared urgency. Yet, if our fundamental methods for testing, measuring, and reporting aren't deeply harmonized – if we're not all working from a well-calibrated "score" – how effectively can we compare insights, validate breakthroughs, or build globally robust solutions?

This isn't merely an academic footnote; it's a practical impediment. From my experiences in advancing analytical tools like the Total Oxidizable Precursor (TOP) assay to contributing to guidance on evaluating new PFAS treatment technologies, a persistent question emerges: are we truly speaking the same scientific language, clearly and consistently?

The "Breakthrough" Mirage: When Apples Aren't Compared to Apples

Consider the frequent announcement of a "game-changing" technology for degrading a stubborn contaminant. Initial lab results often trumpet near-perfect removal, sparking excitement. But the critical questions that follow can reveal a landscape of uncalibrated yardsticks:

Was "removal" complete mineralization to benign substances, or merely transformation into other, possibly uncharacterized, byproducts? (A core issue my colleagues and I addressed in a recent perspective on reporting for aqueous PFAS-degrading technologies.)

Were lab conditions reflective of complex, real-world water matrices, or idealized scenarios?

Can energy consumption and material stability be fairly benchmarked against existing solutions?

Was the analytical rigor – from sampling QA/QC to the breadth of analytes (including precursors) – truly comparable to other leading studies?

When methodologies and reporting lack deep standardization, we end up with results that look similar but are fundamentally difficult to compare. This slows genuine progress and can misdirect investment, complicate regulatory efforts, and erode public trust when promised solutions don't scale or replicate as expected.
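To make the first question above concrete, here is a minimal sketch of how the same experiment can yield two very different headline numbers depending on what "removal" means. It assumes a single parent compound (PFOA, C8HF15O2, roughly 414.07 g/mol with 15 fluorine atoms) and expresses defluorination as fluoride released relative to the organic fluorine initially present. The concentrations are hypothetical illustration values, not data from any study, and the function names are my own.

```python
# Illustrative only: all concentrations below are hypothetical.
F_ATOMIC_MASS = 18.998  # g/mol, fluorine


def parent_removal(c_initial, c_final):
    """Fraction of the parent compound that disappeared (same units for both)."""
    return 1.0 - c_final / c_initial


def defluorination(c_initial_ug_l, molar_mass, n_fluorine, fluoride_released_ug_l):
    """Fraction of initial organic fluorine recovered as fluoride.

    Assumes fluoride_released_ug_l is the background-corrected increase
    in fluoride, in the same mass-per-volume units as the parent compound.
    """
    # Mass of fluorine bound in the parent compound at the start (ug/L)
    organic_f_initial = c_initial_ug_l / molar_mass * n_fluorine * F_ATOMIC_MASS
    return fluoride_released_ug_l / organic_f_initial


# Hypothetical experiment: PFOA drops from 100 to 2 ug/L,
# while only 20 ug/L of fluoride appears in solution.
PFOA_MOLAR_MASS = 414.07  # g/mol
PFOA_N_FLUORINE = 15

removal = parent_removal(100.0, 2.0)
deflu = defluorination(100.0, PFOA_MOLAR_MASS, PFOA_N_FLUORINE, 20.0)

print(f"Parent compound removal: {removal:.0%}")
print(f"Defluorination:          {deflu:.0%}")
```

In this made-up case the parent compound is almost entirely gone, yet well under half of its fluorine is accounted for as fluoride. The rest would sit in byproducts, on surfaces, or in phases that were not measured, which is exactly the gap a shared reporting convention is meant to expose.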

Why Harmonization is Non-Negotiable

The environmental threats we face demand a unified, rigorous, and fast response. Standardized testing and universally adopted best practices are the bedrock for:

Reliability & Reproducibility: The cornerstone of scientific advancement.

True Innovation Assessment: Allowing genuine comparison of new technologies against established ones based on effectiveness, lifecycle cost, and sustainability.

Informed Regulation: Providing policymakers with clear, comparable data.

Public Trust: Fostering confidence through consistent, verifiable scientific claims.

Accelerated Solutions: Building efficiently on a global foundation of shared, reliable knowledge.

The Hurdles: More Than Just Technical Details

Achieving this isn't simple. It involves navigating the tension between the rapid pace of discovery and methodical validation, academic pressures for novelty, proprietary interests in commercial R&D, the inherent complexity of environmental samples, and unequal access to advanced analytical resources. Acknowledging these systemic factors is crucial for fostering collective critical thinking about how we, as a field, can improve.

My Vision: Cultivating a Culture of Comparability

We don't need to stifle innovation with overly rigid prescriptions, especially in early-stage, exploratory research. However, as findings mature and solutions move towards application, a common framework for validation and reporting becomes indispensable.

My vision involves:

"Comparability by Design": Embedding consideration for how work will be understood and benchmarked globally from the earliest stages of research planning.

Elevating Method Harmonization: Stronger support for and engagement with bodies that develop and validate consensus-based standard methods, including rigorous inter-laboratory studies.

Valuing Validation and Comparative Research: Recognizing and incentivizing studies that critically evaluate and compare approaches using standardized protocols. My team, for example, has focused on refining tools like the TOP assay and developing guidance on reporting for PFAS degradation, aiming to help meet this very need.

Fostering "Radical Transparency" in Reporting: Advocating for exhaustive disclosure of experimental details, robust QA/QC, and, where appropriate, open data to enable true meta-analysis.

Critical Thinking in Training: Instilling in the next generation of scientists and engineers not just the "how" of methods, but the profound "why" behind rigor and comparability.

The Unfiltered Imperative: Our Shared Responsibility

This is fundamentally a call for a deeper professional commitment to the principles that underpin all credible science. Our individual contributions achieve maximum impact only when they can be clearly understood, reliably reproduced, and fairly compared by the global community.

When we prioritize standardized testing and best practices, we build greater public trust, enable smarter policy, and, most importantly, accelerate the deployment of genuinely effective solutions.

The critical question for us all remains: How can our daily work, our research designs, our reporting habits, and our collaborations contribute to this essential harmony and ensure we are all, truly, playing from the same, well-tuned score?